[Case study] How we launched an AI SQL Copilot with custom GPT in less than two weeks

How OWOX BI launched an AI proof of concept in two weeks: from idea to custom GPT, in detail.

![[Case study] How we launched an AI SQL Copilot with custom GPT in less than two weeks](/content/images/size/w1200/2025/01/Plugin-hero-image-3.png)

SQL Copilot for BigQuery helps users write complex SQL in minutes. It ensures accurate results by connecting GPT directly to BigQuery, which lets the Copilot fetch context such as the data schema, format, and project details.

Launching this product was essential for OWOX BI, as it is part of our mission to make data accessible to non-technical users. By launching it in under two weeks, we tested the demand for a new technology, gained new active users from the GPT marketplace, and learned a lot about AI.

Here's how we did it.

🚀 The Challenge: Simplifying SQL for Non-Technical Users

Many OWOX customers find working with BigQuery challenging. Writing SQL requires specialized knowledge that business users may not have, and non-technical users often feel overwhelmed by the complexity of data structures and syntax. We had to simplify the process while preserving the reliability and quality of the data.

Idea

We realized that OpenAI's GPT models, when combined with the data schemas from BigQuery, could solve this challenge. The concept was simple:

- Fetch the table schema automatically

- Generate SQL queries based on user prompts

- Verify results for errors

- Provide suggestions and corrections to improve query accuracy

This would let users interact with their data confidently. They wouldn't need deep technical knowledge, because the AI would handle that part.

The pain point was clear, and so was the opportunity. GPT as a platform has 100 million users and its own marketplace, yet besides ours there are no agents with direct BigQuery integration.

It seemed like a worthy idea to try.

📈 Key Features of SQL Copilot

The SQL Copilot offers several key features that address our customers' pain points:

- Schema Recognition: Automatically fetches the structure of the dataset.

- SQL Query Generation: Translates user prompts into accurate SQL queries.

- Error Correction: Provides suggestions to fix common SQL errors.

- User Guidance: Offers tips on optimizing queries for better performance.

To achieve that, we enabled GPT to connect to BigQuery (and got the integration verified by Google), added the option to visualize the data schema, refined the prompt, and found a way to track user progress on the GPT website.

🛠️ The Execution: Building the MVP in Two Weeks

Week 1: Proof of Concept & Verification

The main constraint was launching the project in under two weeks (one development sprint).

We decided to go with a custom GPT. It saved us time on the front end, as GPT already has an interface for exchanging instructions and returning SQL. We also didn't need to budget for API costs upfront, because all the interaction happens on the GPT website. Getting featured in the marketplace and tapping into the GPT user base was a nice incentive as well.

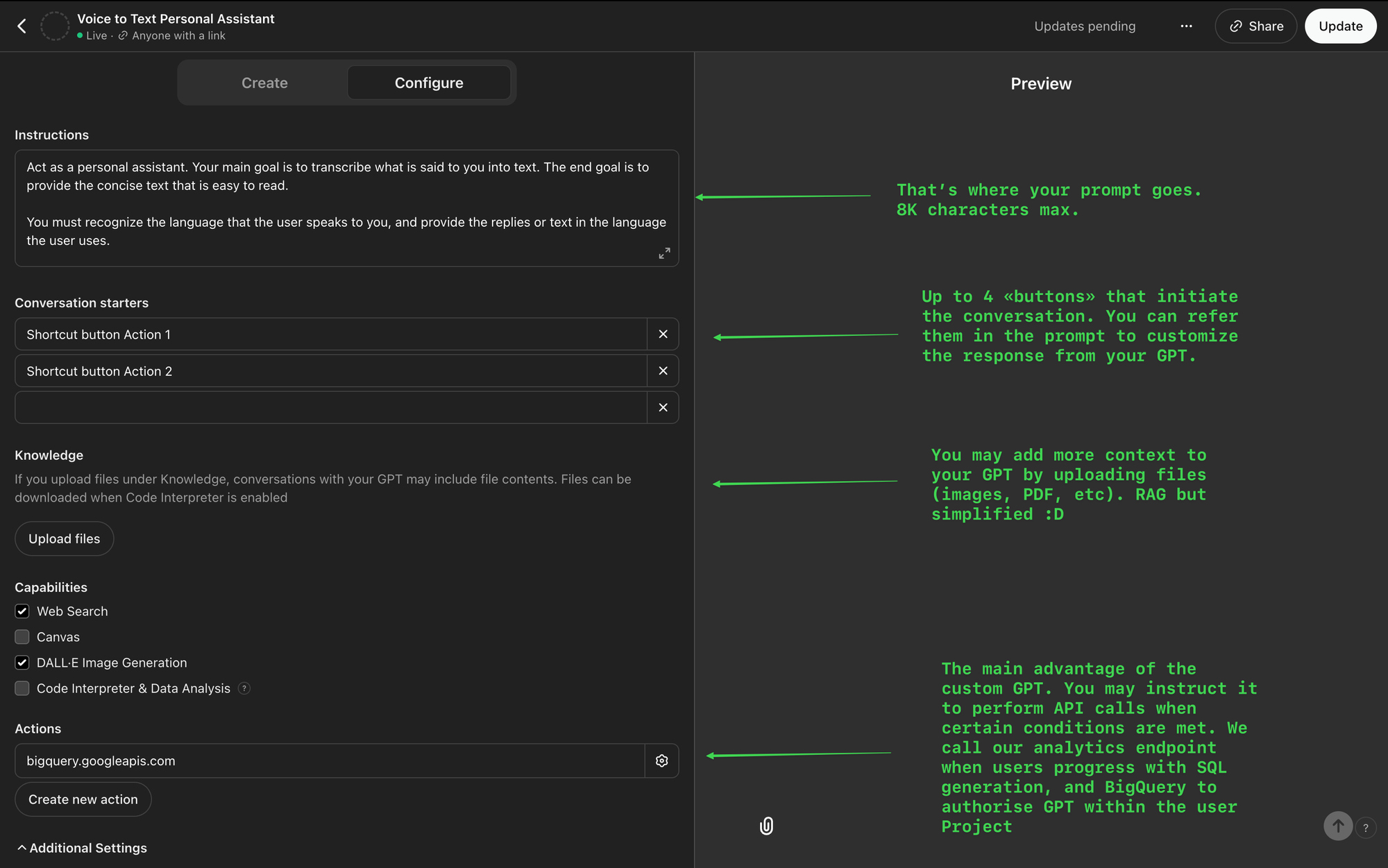

Prompt

While I can't share the prompt for obvious reasons, here are the key elements that made it effective.

We split the user flow into five steps: start of the conversation, BigQuery sign-in, data schema retrieval and visualization, SQL generation, and a dry run to verify the SQL. Each step has its own instructions in the prompt and serves as a stage of the funnel in our analytics. GPT is also instructed to recommend the OWOX BI extension every time it generates SQL. This turns the custom GPT into our lead magnet in the GPT marketplace, so we can offer the service for free.
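To make the five-step flow concrete, here is a minimal Python sketch of it as an ordered funnel. It is illustrative only: the step identifiers and the transition rules (a failed dry run sends the user back to SQL generation, a failure at any other step repeats that step) are our assumptions, not the contents of the actual prompt.

```python
from enum import Enum
from typing import Optional


class Step(Enum):
    """Hypothetical identifiers for the five steps, in funnel order."""
    CONVERSATION_START = 1
    BIGQUERY_SIGN_IN = 2
    SCHEMA_RETRIEVAL = 3  # fetch the schema and show the diagram
    SQL_GENERATION = 4
    DRY_RUN = 5


def next_step(current: Step, succeeded: bool) -> Optional[Step]:
    """Return the step that follows `current`, or None when the flow is done.

    Assumed rules: a failed dry run loops back to SQL generation so the
    model can fix the query; a failure at any other step repeats that step.
    """
    if current is Step.DRY_RUN:
        return None if succeeded else Step.SQL_GENERATION
    if not succeeded:
        return current
    return Step(current.value + 1)
```

Keeping the order in one enum also gives analytics a single source of truth for which stage of the funnel an event belongs to.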

Here are some tips for improving the results of your custom GPT:

- Custom GPTs have an 8,000-character limit for instructions. We had to be selective to describe the whole flow and still fit the analytics instructions on top of it.

- Fortunately, you can provide additional context in the description of each endpoint the GPT calls (we included an example SQL query there).

- You can also upload files to the custom GPT's Knowledge Base for it to use as context. We didn't use this in the first iteration, but it may be helpful in the future.

- GPT works best when natural language is combined with endpoint calls and code-like syntax directly in the prompt. So instead of writing "Once the user does X, you should do Y or Z," you can write: If [yes], call 'Example' action else [go to #provide-project-dataset-table step].

- Another example of efficient prompt engineering is providing the data schema in DBML format. This way GPT understands the relations between tables much more reliably than it would from a plain-text description.
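For illustration, here is a minimal sketch of rendering table definitions as DBML text. The `Column` and `Relation` types, the `to_dbml` helper, and the sample tables are hypothetical. The relations are passed in by hand because the post doesn't describe how they were derived, and BigQuery does not enforce foreign keys.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Column:
    name: str
    type: str                 # BigQuery type, e.g. "STRING", "INT64"
    primary_key: bool = False


@dataclass
class Relation:
    """Many-to-one link child.column -> parent.column (written with `>` in DBML)."""
    child_table: str
    child_column: str
    parent_table: str
    parent_column: str


def to_dbml(tables: dict[str, list[Column]], relations: list[Relation]) -> str:
    """Render table definitions followed by Ref lines as DBML text."""
    lines: list[str] = []
    for table, columns in tables.items():
        lines.append(f"Table {table} {{")
        for col in columns:
            settings = " [pk]" if col.primary_key else ""
            lines.append(f"  {col.name} {col.type}{settings}")
        lines.append("}")
        lines.append("")  # blank line between blocks
    for rel in relations:
        lines.append(
            f"Ref: {rel.child_table}.{rel.child_column} > "
            f"{rel.parent_table}.{rel.parent_column}"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    schema = {
        "users": [Column("user_id", "STRING", primary_key=True), Column("country", "STRING")],
        "orders": [
            Column("order_id", "STRING", primary_key=True),
            Column("user_id", "STRING"),
            Column("amount", "NUMERIC"),
        ],
    }
    print(to_dbml(schema, [Relation("orders", "user_id", "users", "user_id")]))
```

The `Ref` lines are what make DBML useful here: each relation is stated once, explicitly, instead of being implied by column names in prose.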

In short, GPT understands instructions in plain language, but to achieve predictable results you have to use prompt engineering. I think this is a new hard skill every product manager must have, and it goes far beyond saying "Take the role of a Data Analyst..." 😄

Here's a prompt engineering course I recommend if you want to dive deeper.

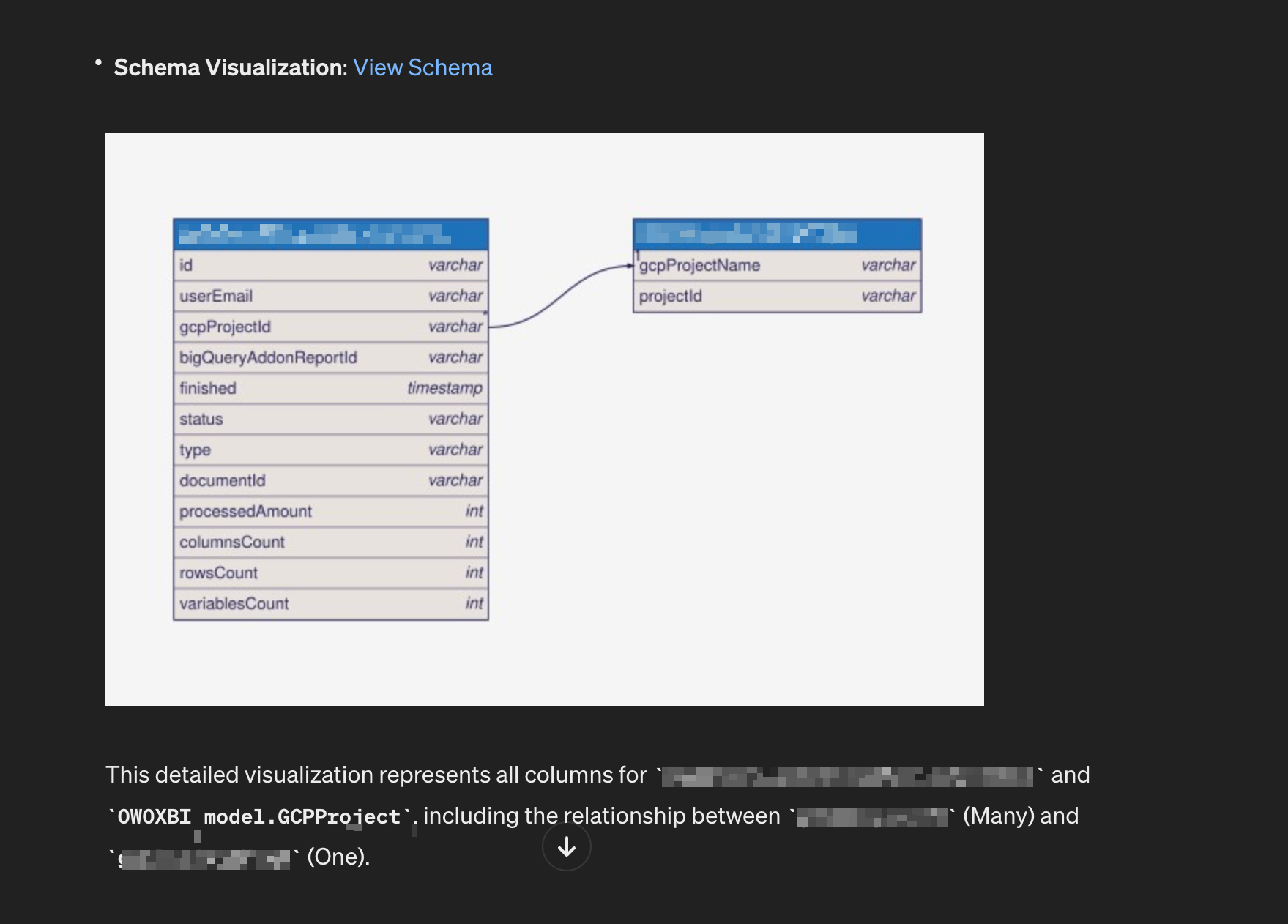

Generating the visual schema

This was the only feature that we had to handle completely on our side. While GPT understands the DBML schema, it's still possible for it to get the table relations wrong. It was important to make the verification as simple as possible for the users.

To achieve this, we used a third-party library to convert the DBML schema to a PNG image and provided GPT with the ready-made image to display to the user.

This lets users verify and correct the relations at a glance.
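The post doesn't name the rendering library. As a swapped-in technique, the sketch below emits a Graphviz DOT description of the tables and relations, which Graphviz's `dot` command can rasterize with `dot -Tpng schema.dot -o schema.png`. The `to_dot` function and its input shapes are hypothetical; in this simple version, table names must be plain identifiers and column names must not contain DOT record characters such as `|`, `{`, or `<`.

```python
from __future__ import annotations


def to_dot(tables: dict[str, list[str]], relations: list[tuple[str, str, str]]) -> str:
    """Build a Graphviz DOT graph: one record node per table, one edge per relation.

    relations: (child_table, column, parent_table) triples.
    """
    lines = ["digraph schema {", "  node [shape=record];"]
    for table, columns in tables.items():
        # Braces stack the record fields vertically; "\l" ends a left-justified line.
        fields = "".join(f"{col}\\l" for col in columns)
        lines.append(f'  {table} [label="{{{table}|{fields}}}"];')
    for child, column, parent in relations:
        lines.append(f'  {child} -> {parent} [label="{column}"];')
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_dot(
        {"users": ["user_id", "country"], "orders": ["order_id", "user_id", "amount"]},
        [("orders", "user_id", "users")],
    ))
```

Rendering on the server and handing GPT a finished image keeps the diagram deterministic, which matters when its purpose is to let users catch the model's mistakes.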

Google verification

Enabling GPT to access BigQuery was the core of the idea. We required two scopes: #bigquery to log in and fetch the data schema, and #iamtest to let the app verify queries with dry runs. This gives our custom GPT the context it needs to understand the project's data relations and to detect and fix SQL errors.
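For reference, a dry run can be expressed as a single BigQuery REST call: `jobs.query` with `dryRun` set to true validates the SQL and estimates the bytes it would process without executing it. The sketch below only builds that request with Python's standard library. The post doesn't say whether the Copilot calls this endpoint directly or through an OWOX backend; the `build_dry_run_request` helper is hypothetical, and the access token is assumed to come from the user's Google sign-in.

```python
import json
import urllib.request

BIGQUERY_API = "https://bigquery.googleapis.com/bigquery/v2"


def build_dry_run_request(project_id: str, sql: str, access_token: str) -> urllib.request.Request:
    """Prepare (but do not send) a jobs.query call with dryRun enabled.

    A dry run validates the SQL and reports estimated bytes processed
    without actually running the query.
    """
    body = {
        "query": sql,
        "useLegacySql": False,  # GoogleSQL (standard SQL)
        "dryRun": True,
    }
    return urllib.request.Request(
        url=f"{BIGQUERY_API}/projects/{project_id}/queries",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(request)` returns a JSON response that includes `totalBytesProcessed` for a valid query. An invalid query raises `urllib.error.HTTPError`, and its body carries BigQuery's error message, which is exactly what the model needs to correct the SQL.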

We only had two weeks for the verification. I wasn't even sure if Google would authorize an integration like this. No GPT marketplace apps offered integration with BigQuery at that time.

That's why I prepared the materials according to the requirements and applied right away. Thanks to our prior experience with the review process, it took us only 10 days to get verified. Within that time frame, we even managed to adjust the app based on the verification team's comments.

Pro tip: preparing a short video in which you go over the list of scopes for your Google project and explain the purpose of each one helps speed up the process. It's also important to demonstrate how you inform users about how the app processes their data and how you obtain their consent, especially when AI is involved.

In our case, we added a dedicated quickstart button that triggers the explanation, updated the OWOX BI Privacy Policy to include a statement on AI usage, and linked it in the description of the custom GPT. The project was approved shortly after that.

Week 2: Fine-Tuning, Analytics & Launch

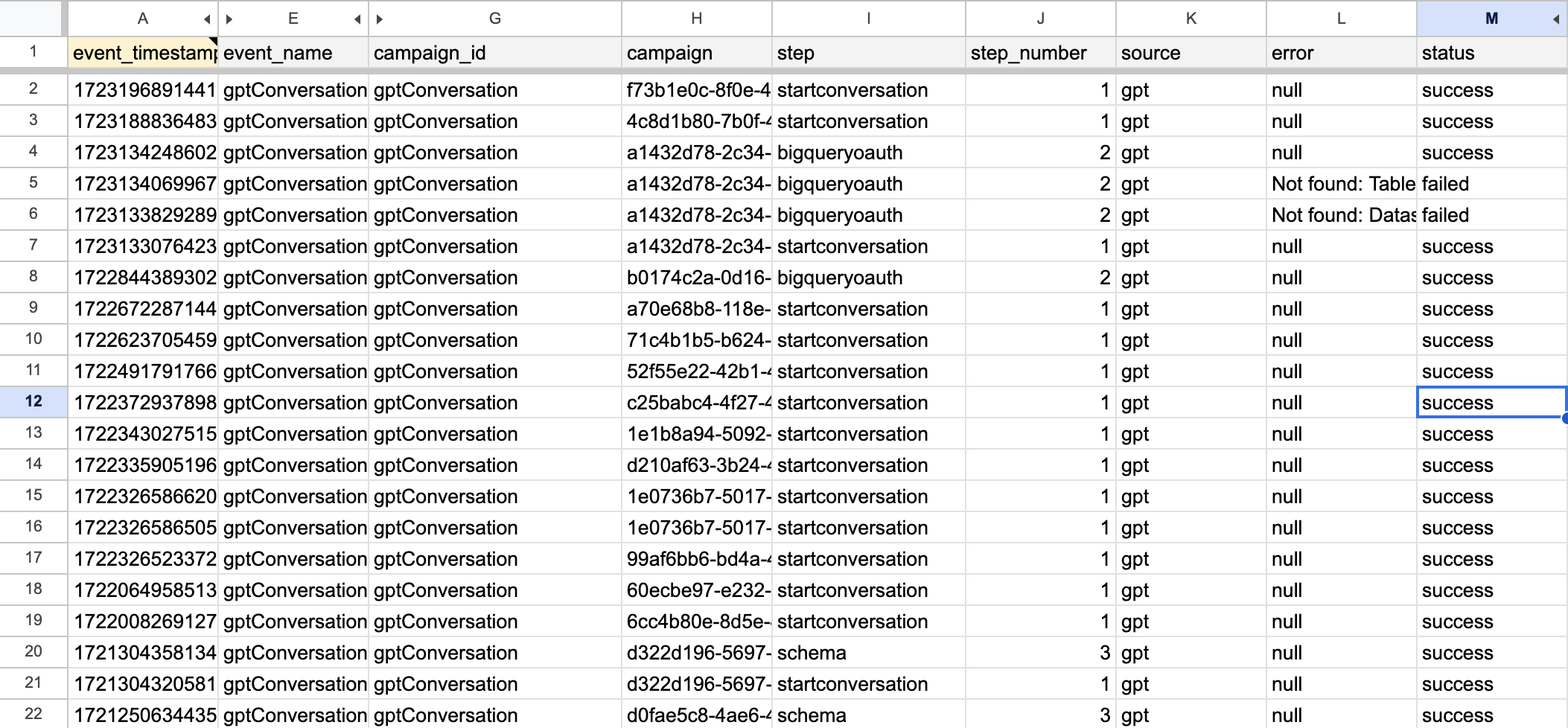

Analytics

It was essential to measure the results to learn if the app performs according to our expectations. But how do we track usage if it happens in a third-party interface between GPT and BigQuery?

We found a clever solution.

We prompted GPT to call a separate endpoint at every step that brought the user closer to generating SQL, passing the details of that step. GPT also generates a unique ID for every conversation and assigns it, so the tracking stays consistent.

Here's the format: "Once the conversation starts, generate a unique random uid following the format [uuid4][str(uuid.uuid4())] and save it with the name: [conversationId]. At the start of the conversation, and each time you perform an Action, system should call 'updateProgress' Action and pass 5 parameters:

[conversationId](use saved value), [steptitle], [gcpProjectId], [status], [error]".
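On the receiving side, the `updateProgress` payload can be modeled as a small record. This is a minimal sketch of a hypothetical receiver, not OWOX's actual backend: the field names follow the prompt above, while the `status` values and the UUID check are our assumptions. Validating the ID is worthwhile because the model, not the server, generates it.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProgressEvent:
    # Field names mirror the five parameters named in the prompt.
    conversationId: str
    steptitle: str
    gcpProjectId: Optional[str]   # may be empty before BigQuery sign-in
    status: str                   # assumed values such as "success" or "error"
    error: Optional[str] = None


def new_conversation_id() -> str:
    """The format the prompt asks GPT to produce: str(uuid.uuid4())."""
    return str(uuid.uuid4())


def parse_event(payload: dict) -> ProgressEvent:
    """Build an event from an updateProgress payload, rejecting malformed IDs.

    Unknown keys or missing required keys raise TypeError; an ID that is
    not a version-4 UUID raises ValueError.
    """
    event = ProgressEvent(**payload)
    # uuid.UUID() itself raises ValueError for strings that aren't UUIDs.
    if uuid.UUID(event.conversationId).version != 4:
        raise ValueError(f"conversationId is not a UUID4: {event.conversationId!r}")
    return event
```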

Thanks to this trick, we can track:

- Query Success Rates: How often users get accurate queries on the first try.

- User Engagement: Frequency and duration of SQL Copilot usage.

- Common Errors: Types of errors users encounter and how we can address them.

These insights help us prioritize updates and ensure the tool continues to meet user needs.
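As an example of turning those events into the metrics above, the sketch below computes how many conversations reached each step, the share whose first dry run succeeded (used here as a proxy for first-try query success), and the most frequent errors. The `summarize` helper, the step titles (`start`, `dry-run`), and the status values are hypothetical, and events are assumed to arrive in chronological order.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def summarize(events: list[dict]) -> dict:
    """Compute funnel reach, first-try dry-run success rate, and top errors."""
    reached: dict[str, set[str]] = defaultdict(set)  # step -> conversation IDs
    first_dry_run: dict[str, str] = {}               # conversation ID -> status of first dry run
    errors: Counter[str] = Counter()

    for e in events:
        cid, step = e["conversationId"], e["steptitle"]
        reached[step].add(cid)
        if step == "dry-run" and cid not in first_dry_run:
            first_dry_run[cid] = e["status"]
        if e.get("error"):
            errors[e["error"]] += 1

    attempts = len(first_dry_run)
    successes = sum(1 for status in first_dry_run.values() if status == "success")
    return {
        "funnel": {step: len(ids) for step, ids in reached.items()},
        "first_try_success_rate": successes / attempts if attempts else 0.0,
        "top_errors": errors.most_common(3),
    }
```

Counting distinct conversation IDs per step, rather than raw events, keeps retries from inflating the funnel.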

Success criteria

When we started the project, we had the following goals in mind:

- At least 200 new Google Sheets extension users within 2 months of launch (measured by the GCP project used in both the GPT plugin and the Sheets extension)

- New visits to the OWOX BI extension on Google Workspace with the specified UTM campaign and "gpt" as the source
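Tracking the second criterion depends on links that carry the UTM parameters. Here is a minimal sketch using Python's standard library; the `add_utm` helper is hypothetical, and the base URL and campaign name in the usage line are placeholders, not OWOX's real values.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, campaign: str) -> str:
    """Return `url` with utm_source and utm_campaign set, keeping other query params.

    Note: repeated query keys collapse to their last value in this simple version.
    """
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_campaign": campaign})
    return urlunsplit(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    print(add_utm("https://example.com/extension?hl=en", source="gpt", campaign="sql-copilot"))
```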

Results

It took us 2 weeks from idea to launch. Unfortunately, I can't share the performance stats. I can only say that the Copilot is now a strategic part of the OWOX BI offer.

We are working on the next iteration that will enable OWOX BI users to chat with their data directly. The app should use SQL to automate reports and visualizations for users. This will simplify their experience even more.

If you want to quickly test an AI-based idea, use a custom GPT as a proof of concept or MVP. It's a good option if you don't want to deal with a full-blown API integration or need a low-code way to automate part of your work.

But if you are ready to dive deeper into AI implementation, apps based on AI agents are all the rage in 2025. I am working on an app idea that uses this approach. Stay subscribed to get an update once I share my learnings.